Human Debt™

The invisible accumulation of organisational strain, misalignment, and decision friction

Human Debt is the accumulation of unspoken and unmeasured people-related risk. It builds through fear of consequences, silence, masking, burnout, and erosion of trust.

Like technical or financial debt, it compounds, and as it grows it drives both technical and financial debt up.

Human Debt is a leading indicator of execution failure in AI, transformation, and regulated environments.

Human Debt is not an HR construct. It is an execution risk construct.

These artefacts support executive and governance decision-making, not team-level performance management.

Human Debt™ is a framework originated by Duena Blomstrom. This page describes its institutional application.

The Human Risk Blind Spot

Traditional HR metrics lag outcomes. Engagement surveys lack depth. Audits reveal only what has already gone wrong.

Lagging Indicators

Governance relies on delivery, technical performance, and financial burn data, so risks are only seen after the fact.

The Invisible Problem

Crucial early warnings are missed when people hesitate to speak up, challenge assumptions, or escalate concerns.

Inevitable Failure

These unseen issues compound into costly failures that surface only when it is too late to intervene.

Boards and executives remain accountable without visibility into the human system.

You cannot govern risks you cannot see.

PeopleNotTech closes this gap with real-time human risk telemetry.

Solution Views

Unmanaged Human Debt Consequences

In the AI era, unmanaged Human Debt doesn't just slow organisations down; it devastates them.

AI Adoption Resistance

Progress stalls as teams resist change

Dangerous Tech Debt

Immeasurable and accumulating technical risk

Transformation Drift

Strategic initiatives get derailed

Hidden Errors

Silent undermining of operations

Compliance Breaches

Leading to severe penalties

Attrition Spikes

Depleting talent and morale

What We Provide

Human Risk Governance — not culture management. This is execution risk control.

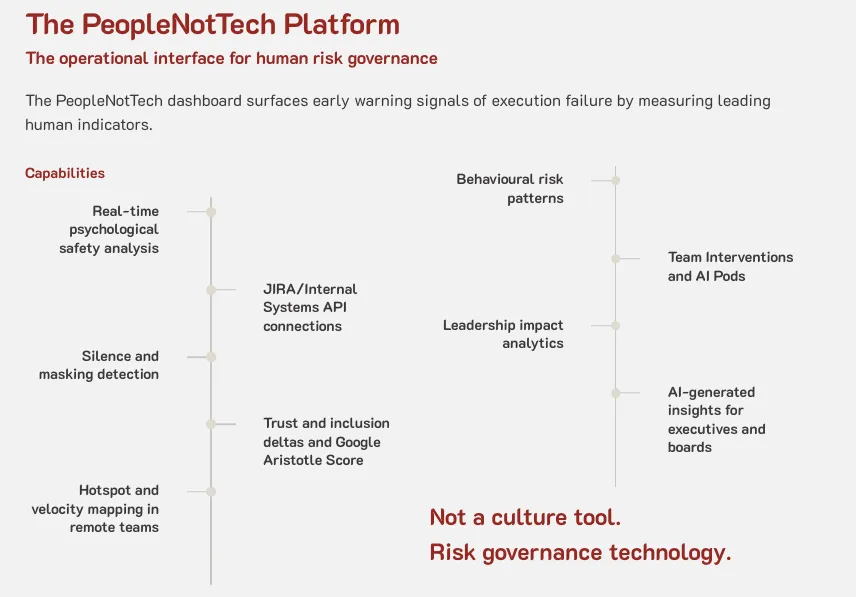

These capabilities exist to translate human behaviour into decision-grade risk signals.

Early-Warning Diagnostics

Detect human risk before it impacts outcomes

Quantified Signals

Real metrics, not surveys or sentiment

Board-Ready Visibility

Clear view into execution strain for leadership

Clear Interventions

Act before failure becomes visible

What Leaders Can Do

- Hidden failure patterns

- Behavioural resistance

- Transformation drift

- Compliance vulnerabilities

- Human Debt levels

- Risk velocity and trendlines

- Thresholds and inflection points

- Impact of leadership actions

- CIO and COO reporting

- Risk committee oversight

- Board-level visibility

- Organisational solvency monitoring

Proven in Practice: From Human Debt to Human Risk Infrastructure

Global research now confirms what practitioners recognised early: AI success is constrained by human systems, not tools.

PeopleNotTech is an organisation focused on surfacing and governing execution risk in AI, transformation, and large-scale change.

It applies the Human Debt™ concept—originated by Duena Blomstrom—to institutional diagnostics, signal tools, and decision frameworks.

Human Debt™ is the organisational risk that accumulates when human systems — trust, psychological safety, accountability, and exposure — are neglected during AI and technological transformation.

The concept of Human Debt™ was originated by Duena Blomstrom to describe the invisible, compounding risk that builds when people stop speaking up, concerns go unmeasured, and execution reality diverges from executive intent.

Long before AI failure rates, post-deployment underperformance, and stalled transformations became widely acknowledged, this work focused on:

- Human risk as a first-class organisational liability

- Why performance collapses after transformation launches

- The widening gap between executive strategy and lived team reality

- How fear, silence, and unmanaged exposure shape execution outcomes

From Concept to Infrastructure

PeopleNotTech applies Human Debt™ institutionally, building human risk infrastructure that moves the concept beyond theory, surveys, sentiment tracking, or workshop-based interventions.

This infrastructure includes:

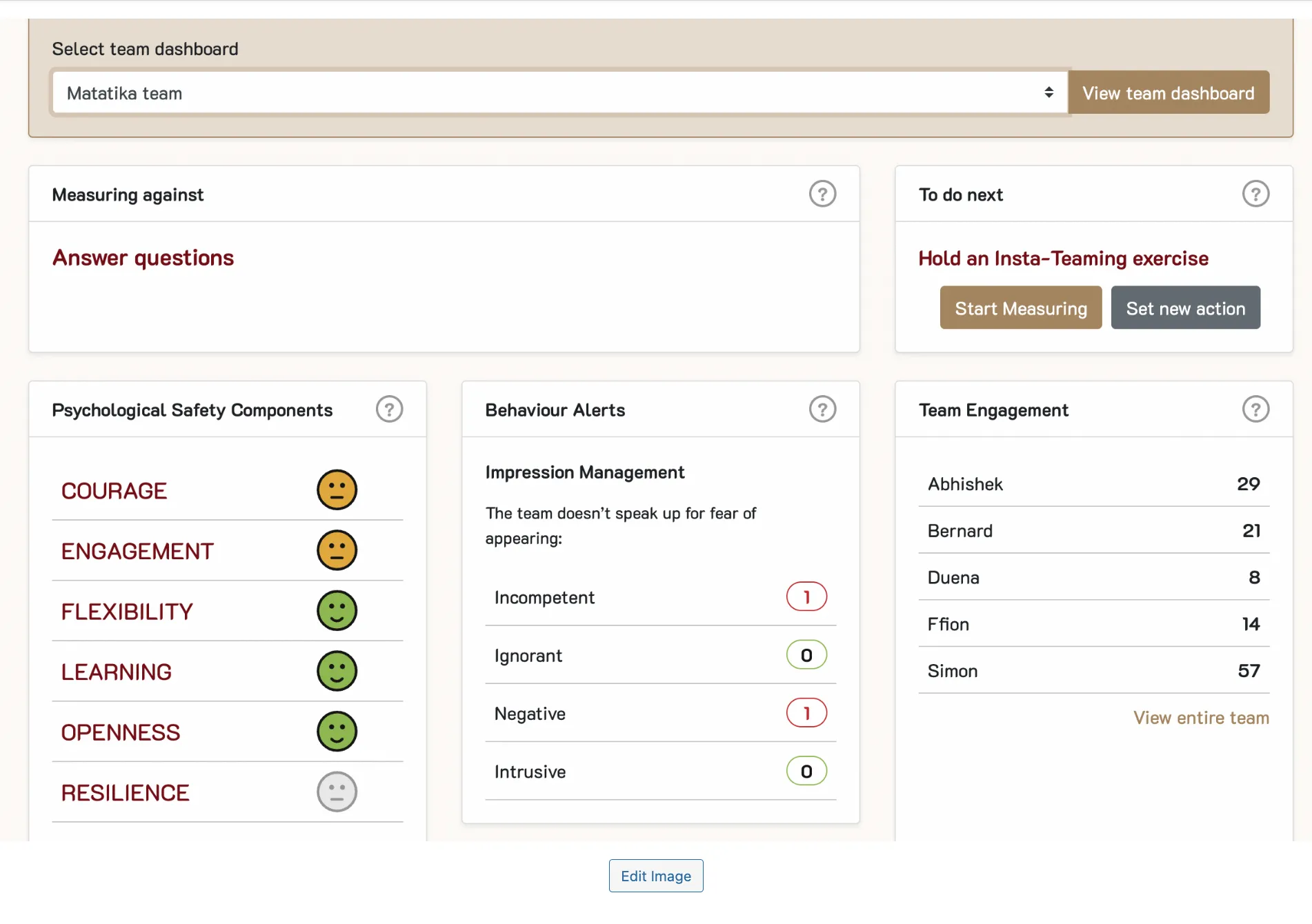

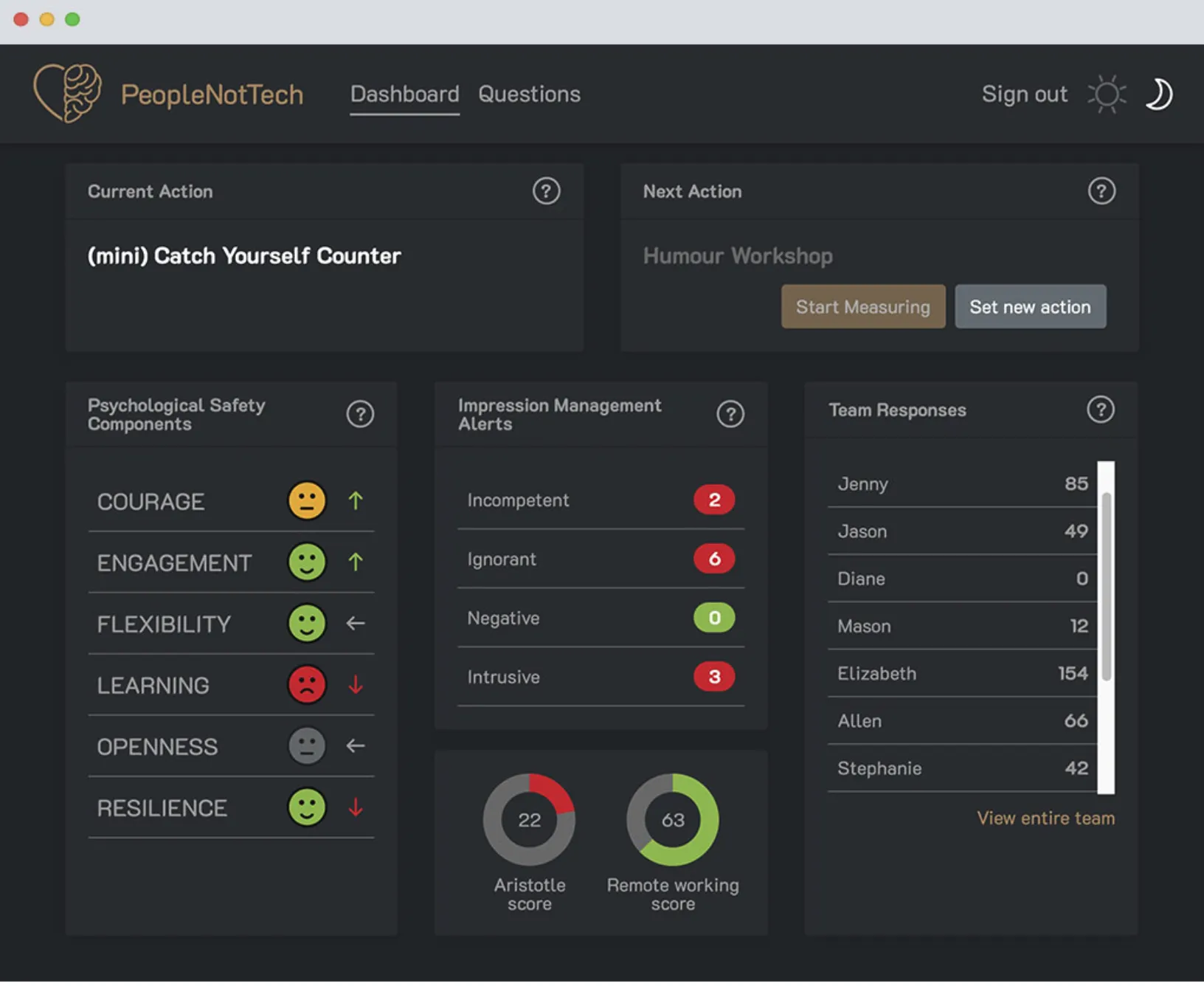

- Behavioural measurement engines for environments where psychological safety is under constant pressure — informed by research such as Harvard Professor Amy Edmondson's work and Google's Project Aristotle, but engineered for operational reality beyond academic models

- High-performing team dashboards translating lived experience into execution-relevant signals

- Shared success metrics embedded directly into Agile and transformation programmes

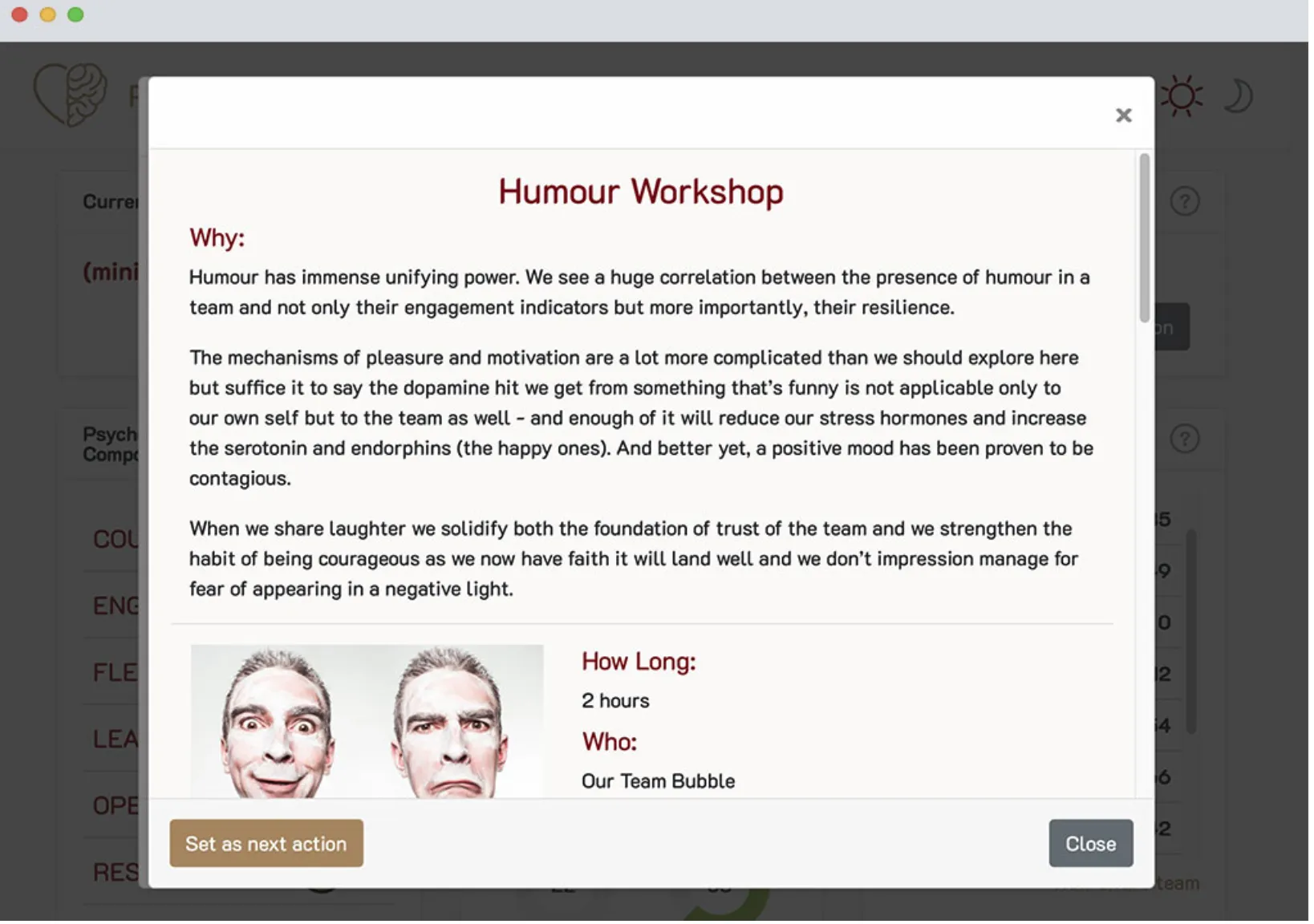

- Psychological safety improvement methods designed for delivery environments, not training rooms

- Human-centred risk models linking behaviour, exposure, and decision-making to execution outcomes

- AI-assisted signal detection surfacing silence, masking, and emerging failure patterns before outcomes degrade

- Governance frameworks translating human dynamics into decision-grade insight for executives, boards, and regulators

This work turns culture, behaviour, and psychological safety into measurable, governable human risk intelligence.

Practitioner Validation in High-Pressure Environments

Human Debt™ instrumentation, as applied institutionally by PeopleNotTech, has been used inside Google-scale technology environments, hyperscale platforms, global enterprises, and fast-moving organisations. In these settings, AI-assisted development, code visibility, continuous review, and human exposure are daily operational realities, not abstract research variables.

PeopleNotTech has been used by leaders across industries, at organisations including Novartis, Sports Direct, Matatika, and FedEx, to detect and prevent execution failure before it impacts outcomes.

Why This Authority Matters

PeopleNotTech does not rely on opinion, personality, or consultancy credibility.

It stands at the intersection of:

- A concept originated by Duena Blomstrom (Human Debt™)

- Global research convergence

- Institutional application inside real organisations

That is why PeopleNotTech is not another AI transformation vendor. It is human risk infrastructure for organisations that need AI to work — in reality, not theory.

Trusted by forward-thinking leaders across industries and geographies to detect and prevent execution failure before it impacts outcomes.

What Leaders Say